We may never be able to eradicate political violence such as that now seen in Ethiopia, or the deaths and damage wrought around the Colombo region in Sri Lanka. As the line often attributed to Plato has it, “Only the dead have seen the end of war”. But a more realistic goal may be within our grasp: if we can see more clearly when and where violence starts, we can better prepare for conflicts and mitigate their harm.

Illustration: Leo Reynolds / Flickr. CC BY-NC-SA 2.0

Computing useful conflict predictions, however, remains daunting. A decade of scientific progress has greatly increased our ability to predict the continuation of ongoing conflicts (and of peace), yet even sophisticated models are still caught by surprise by changes in fatalities, for example when tensions intensify, leap between non-contiguous regions, or de-escalate.

To break this logjam, the ViEWS team adopted an unconventional approach and ran a conflict prediction competition, the first of its kind in this domain. We invited researchers to predict changes in armed conflict fatalities over time, not just their level, at two levels of analysis: the country-month, and a more disaggregated subnational unit-month in Africa (what we call ‘PRIO-GRID-month’). Our goal was to leverage the many-models effect, also known as the wisdom-of-the-crowd effect: on complex and difficult tasks, combining multiple distinct, even mutually inconsistent, perspectives may be necessary to improve knowledge.
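To make the difference between levels and changes concrete, here is a small sketch that turns a monthly series of fatality counts into a change target. It assumes, for illustration only, that change is measured as the difference in log(1 + fatalities) between a month and a later month; the function name `log_change`, the `horizon` parameter and the example counts are hypothetical and are not taken from the competition's data or code.

```python
import math


def log_change(fatalities, horizon=1):
    """Change in log(1 + fatalities) from month t to month t + horizon.

    Assumption for illustration: changes are measured on the log(1 + x)
    scale, which is defined for zero-fatality months and compresses
    very large counts.
    """
    changes = []
    for t in range(len(fatalities) - horizon):
        before = math.log1p(fatalities[t])
        after = math.log1p(fatalities[t + horizon])
        changes.append(after - before)
    return changes


# Hypothetical monthly fatality counts for a single country.
series = [0, 0, 12, 40, 35, 3, 0]
deltas = log_change(series, horizon=1)
print([round(d, 2) for d in deltas])  # → [0.0, 2.56, 1.15, -0.13, -2.2, -1.39]
```

Two months with similar levels, such as 40 and 35, produce a change close to zero, while the onset month (0 to 12 deaths) produces the largest increase in the series. A model that predicts levels well can still miss exactly these onsets.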

Towards this end, we recruited teams from political science, economics, statistics, sociology, and related disciplines, and provided all participants with the same set of targets on which to train their models. By September 2020, contestants had to deliver their best predictions for what was then the true future, October 2020 to March 2021, as well as a set of ‘back-cast’ predictions for 2017–2019 that were used to further test the models’ accuracy. By evaluating the models at least partly on how they performed in the future, we could separate illusions of predictability based on curve-fitting the past from the useful distillation of signals that reduce uncertainty about the future.
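Scoring models only on periods they were not trained on can be sketched as a strictly time-ordered split. This is one plausible setup rather than the competition's exact procedure: the helper names, the January 2010 start of the hypothetical training data, and the choice to fit back-cast models only on months before 2017 are assumptions. Only the 2017–2019 back-cast window and the October 2020 to March 2021 forecast window come from the text.

```python
from datetime import date


def month_range(start, end):
    """Return the first day of every month from `start` to `end`, inclusive."""
    months = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        months.append(date(year, month, 1))
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return months


def temporal_split(months, test_start, test_end):
    """Split months by time: everything before `test_start` is training data,
    [test_start, test_end] is the held-out test window. Nothing is shuffled."""
    train = [m for m in months if m < test_start]
    test = [m for m in months if test_start <= m <= test_end]
    return train, test


# Hypothetical data availability: January 2010 to August 2020.
observed_months = month_range(date(2010, 1, 1), date(2020, 8, 1))

# Back-cast: fit on months before 2017, score on 2017-2019.
train, backcast = temporal_split(observed_months, date(2017, 1, 1), date(2019, 12, 1))

# True future: months that had not yet happened when forecasts were due.
true_future = month_range(date(2020, 10, 1), date(2021, 3, 1))

print(len(train), len(backcast), len(true_future))  # → 84 36 6
```

Splitting by date rather than shuffling rows is the point: a random split would let a model see months that come after the ones it is scored on, which is exactly the kind of curve-fitting the future-oriented evaluation is meant to rule out.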

The forecasting models the participants developed exceeded our expectations. Each model brought new and unique insights to the problem, including the development and use of new algorithms; the integration of textual information extracted from news and internet queries; and the use of the teams’ own ensembling techniques, in which a single entry combined several models rather than relying on one, to increase its predictive performance. These contributions are available in the International Interactions special issue.

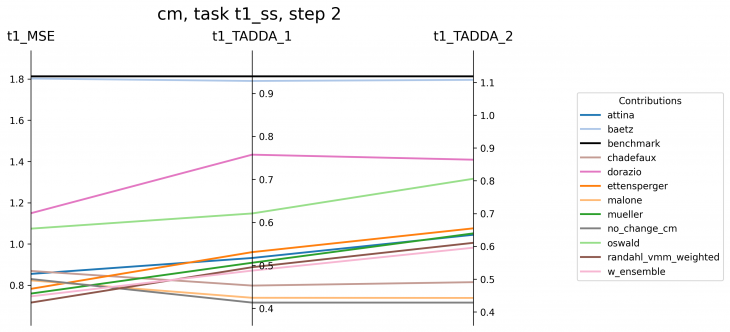

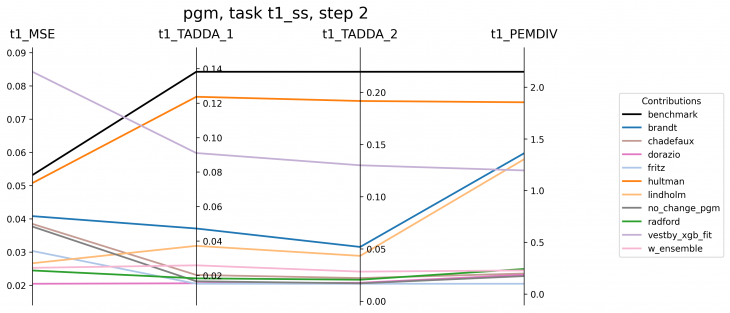

Figure 1. Summary of the predictive performance of the models for the true future set. Better models are closer to the bottom.

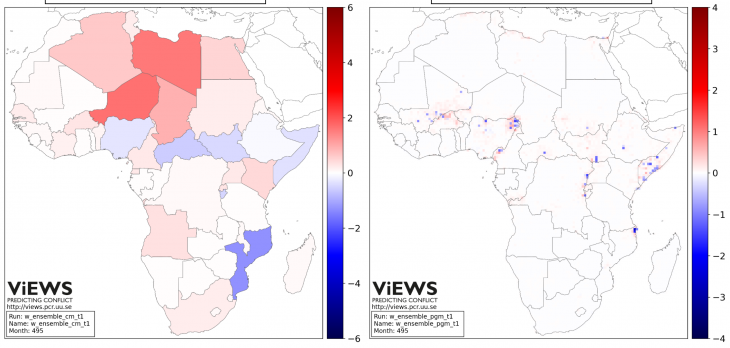

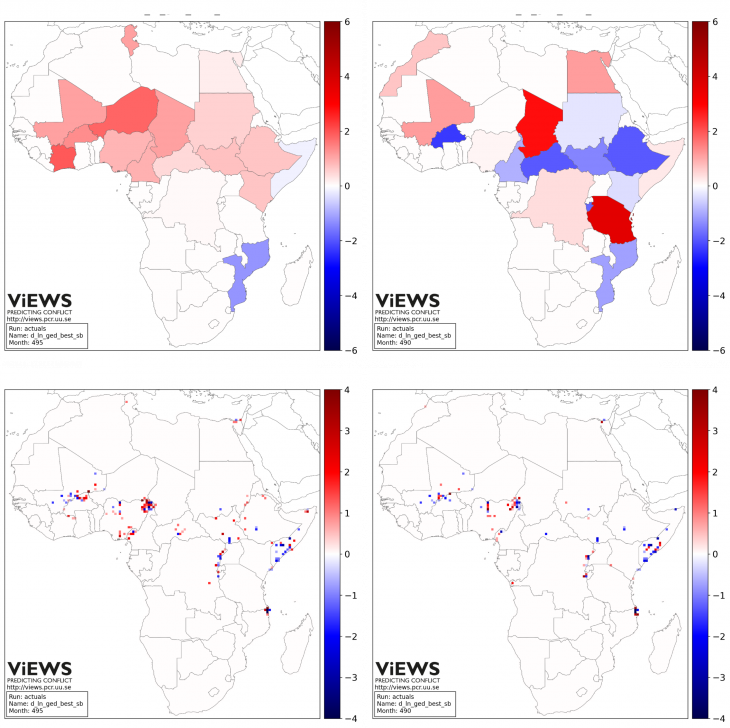

To capitalize on the ‘wisdom of the crowd’, we combined the predictions from all the models into an ensemble. Our evaluation shows that the crowd is indeed wise: the ensemble of diverse models produces an aggregate forecast (Figure 2; compare the observed changes in Figure 3) that is significantly better than the average individual set of predictions.
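Part of why averaging helps can be shown with a small sketch. For squared error and an unweighted mean, the ensemble's error equals the models' average error minus the average spread of their predictions around the ensemble mean (the ambiguity decomposition), so the ensemble can never do worse than the average model and gains the most when the models disagree. The toy predictions are invented, and the competition ensemble may have been weighted or scored differently.

```python
def mse(pred, obs):
    """Mean squared error between two equal-length sequences."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def ensemble_mean(predictions):
    """Unweighted, unit-by-unit average of several models' predictions."""
    return [sum(values) / len(values) for values in zip(*predictions)]


def ambiguity(predictions):
    """Average spread of the models around the ensemble mean (the diversity term)."""
    centre = ensemble_mean(predictions)
    n_models = len(predictions)
    spread = [
        sum((model[i] - centre[i]) ** 2 for model in predictions) / n_models
        for i in range(len(centre))
    ]
    return sum(spread) / len(spread)


# Invented predicted changes from three models for five units, plus the outcome.
observed = [0.0, 1.2, -0.5, 2.0, 0.3]
model_predictions = [
    [0.4, 0.8, -1.0, 1.5, 0.0],
    [-0.3, 1.9, 0.2, 2.6, 0.9],
    [0.2, 0.5, -0.9, 1.2, -0.4],
]

average_individual = sum(mse(p, observed) for p in model_predictions) / len(model_predictions)
ensemble_error = mse(ensemble_mean(model_predictions), observed)

# Ambiguity decomposition: average individual error = ensemble error + diversity.
print(round(average_individual, 4), round(ensemble_error, 4), round(ambiguity(model_predictions), 4))
```

The identity guarantees only that the ensemble beats the average model under squared error; it does not guarantee that the ensemble beats the best individual model.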

This is not only a methodological insight, suggesting that prediction competitions such as this one could become a staple of future conflict research; it is also theoretically pivotal, because it suggests that our current, compartmentalized knowledge of conflict processes offers only a partial representation of a phenomenon that is interactive, multi-scale, dynamic, and complex.

Figure 2. Maps of predicted change in conflict fatalities from August 2020 through March 2021, country-month (left) and sub-national unit-month (right)

The evaluation of the models summarized in Figure 1 also taught us some valuable lessons on forecasting conflict.

First, conflicts tend to recur over time and to cluster in space, so much so that they may ‘trap’ societies in a spiral of violence. Models that capture these temporal and spatial dependencies perform particularly well when predicting conflict in the future, even when they are based on a very parsimonious set of predictors.
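A parsimonious model of this kind needs only a few features. The sketch below builds two of them: a temporal lag (the same cell's fatalities in the previous month) and a spatial lag (mean fatalities in the surrounding cells in the previous month, using queen contiguity). It assumes that grid cells are identified by hypothetical (row, col) indices and that monthly counts sit in plain dictionaries; the function names and data layout are illustrative, not those of any competition entry.

```python
def spatial_lag(grid, cell):
    """Mean fatalities in the up-to-eight cells surrounding `cell` (queen contiguity).

    `grid` maps hypothetical (row, col) cell indices to fatality counts for one month.
    Cells on the edge of the grid simply have fewer neighbours.
    """
    row, col = cell
    neighbours = [
        grid[(row + dr, col + dc)]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0) and (row + dr, col + dc) in grid
    ]
    return sum(neighbours) / len(neighbours) if neighbours else 0.0


def lag_features(grid_by_month, month, cell):
    """Temporal and spatial lags for `cell`, both taken from the previous month."""
    previous = grid_by_month[month - 1]
    return {
        "own_lag": previous[cell],                     # conflict tends to recur in place
        "neighbour_lag": spatial_lag(previous, cell),  # and to cluster with nearby cells
    }


# A hypothetical 3 x 3 block of cells; month 1 is the month being predicted.
month_0 = {(r, c): 0 for r in range(3) for c in range(3)}
month_0[(0, 1)] = 8
month_0[(1, 2)] = 4
month_1 = {(r, c): 0 for r in range(3) for c in range(3)}
grid_by_month = {0: month_0, 1: month_1}

print(lag_features(grid_by_month, 1, (1, 1)))  # → {'own_lag': 0, 'neighbour_lag': 1.5}
print(lag_features(grid_by_month, 1, (0, 0)))
```

The centre cell had no fatalities itself, but its neighbourhood did, which is the kind of spill-over signal a purely temporal model would miss.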

Second, the prediction competition cast the hypothesis that conflict is fundamentally unpredictable in a new light. During the period the competition covered, models could indeed learn to predict changes in fatalities better than a baseline model, so at least some signal exists at a time scale of months for the tasks we designed. That said, there is likely still a ceiling on model performance, because conflicts are inherently uncertain.
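One common way to express “better than a baseline” is a skill score that compares a model’s mean squared error with the baseline’s. The sketch assumes, for illustration, a no-change baseline that predicts zero change in fatalities for every unit; the competition’s benchmark models may have been defined differently, and the numbers are made up.

```python
def mse(pred, obs):
    """Mean squared error between two equal-length sequences."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def skill_vs_no_change(pred, obs):
    """Skill score 1 - MSE(model) / MSE(baseline) against a no-change baseline.

    Assumption: the baseline predicts zero change in fatalities for every unit.
    Positive values mean the model beats the baseline; 1 would be a perfect forecast.
    """
    baseline = [0.0] * len(obs)
    baseline_error = mse(baseline, obs)
    if baseline_error == 0:
        raise ValueError("skill is undefined when nothing changed")
    return 1 - mse(pred, obs) / baseline_error


# Invented observed changes and model predictions for four units.
observed = [0.0, 1.0, -1.0, 2.0]
predicted = [0.1, 0.6, -0.4, 1.5]
print(round(skill_vs_no_change(predicted, observed), 2))  # → 0.87
```

A score of 0 means no improvement over the baseline and negative values mean the model does worse. Because conflict outcomes are inherently uncertain, one would expect even the best models to stay well short of 1.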

Hence, we need to spell out clearly what we expect predictive performance to mean: accuracy in the direction of predictions (up, down, or constant) versus accuracy in location or magnitude. To this end, we introduced new performance metrics in the competition that take direction (TaDDA) and distance (pEMDiv) into account, complementing conventional metrics such as mean squared error. In the future, these and other bespoke, socially informed optimization criteria could be used to train machines to better match what policy-makers and humanitarian aid groups need.
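The difference between direction and magnitude can be illustrated with a deliberately simple, direction-penalized absolute error. This is not the competition’s TaDDA formula; it is a hypothetical metric in the same spirit, which adds the size of the forecast as an extra penalty whenever the forecast’s direction (up, down, or flat within a tolerance `eps`) differs from the observed change. The two toy forecasts have identical mean squared errors, but only one of them gets the direction right.

```python
def direction(x, eps):
    """+1 (up), -1 (down) or 0 (no change), treating |x| <= eps as no change."""
    if x > eps:
        return 1
    if x < -eps:
        return -1
    return 0


def mse(pred, obs):
    """Mean squared error: blind to whether a forecast points the right way."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def direction_penalized_mae(pred, obs, eps=0.05):
    """Hypothetical metric: absolute error plus |forecast| when the direction is wrong."""
    total = 0.0
    for p, o in zip(pred, obs):
        error = abs(p - o)
        if direction(p, eps) != direction(o, eps):
            error += abs(p)  # extra cost when forecast and outcome move in different directions
        total += error
    return total / len(obs)


observed = [0.1, -0.1]         # a small rise in one unit, a small fall in the other
right_direction = [0.4, -0.4]  # overshoots, but points the right way
wrong_direction = [-0.2, 0.2]  # same size of error, opposite direction

print(mse(right_direction, observed), mse(wrong_direction, observed))
print(direction_penalized_mae(right_direction, observed),
      direction_penalized_mae(wrong_direction, observed))
```

Under squared error alone the two forecasts are indistinguishable; the direction-aware score separates them. Capturing distance in space or time, as pEMDiv is meant to, would need a different construction, for example one inspired by the earth mover’s distance, which this sketch does not attempt.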

Lastly, this competition provided new evidence that complex models with hundreds of parameters and sophisticated automated tools are perhaps the best individual tools we currently have for predicting conflict fatalities. This serves as both a challenge and an opportunity for political scientists and those in the policy sphere. The challenge is that opaque, complex models need to be paired with investments in tools and visualizations that make their results interpretable, so that the findings can contribute to improved theories of conflict processes and augment, rather than replace, decision-making by practitioners.

The opportunity is that these complex models, and ensembles of them even more so, are likely picking up a signal from the social world. While we may not yet know how to formalize that signal in a principled, theoretical model, its existence works like a foghorn: where it is heard, and how loudly, can be used to infer what is still hidden.

Figure 3. Maps of observed change in fatalities from August 2020 to October 2020 (left) and from August 2020 to March 2021 (right).

The authors

- Paola Vesco is a postdoctoral researcher at the Department of Peace and Conflict Research, Uppsala University

- Michael Colaresi is a Professor at the Department of Political Science, University of Pittsburgh

- Håvard Hegre is a Research Professor at the Peace Research Institute Oslo (PRIO)